On Systems & Strategy

High-quality strategies in ecosystems offer four things: loud feedback; flexibility in acceptable outcomes; shared indicators for failure and success; and recognized connection points.

Read Next

The Changemaker's Skills Maturity Matrix

This development tool is designed to give change-agents inside organizations clarity into their path forward, help them define and deepen strengths, and maybe give us some shared language about what we do.

Five Org Design Things N° 10

Pattern languages and org analysis; RTO is bad, even if offices are good; old maps made 3D; diverging values worldwide; exit interviews

Five Creativity Things

Challenges facing creativity; owning ideas from beginning to end; opinionated palettes; are we doing zines again?; randomness that didn’t fit in the first four categories

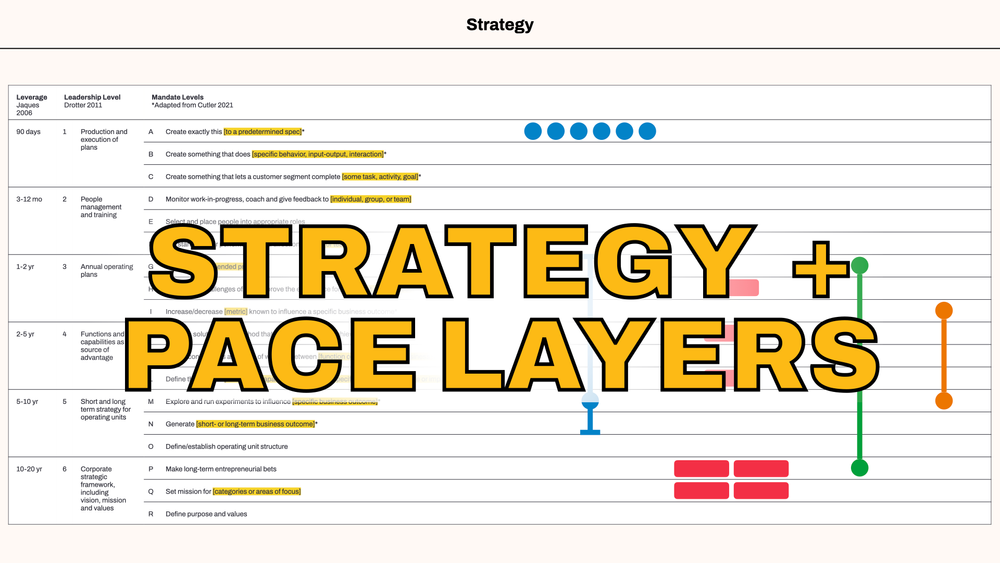

How to Combine Pace Layers, Org Design, and Strategy

Four ways to use Pace Layers in strategy and OD work: 🚀 As a career planning tool; 🎓 As a strategy tool; 🔬 As a diagnostic or sense-making tool; 🎨 As a design tool for value-adding layers.